Last updated: August 2022

In this lesson, you will learn what Kubernetes is, why it matters, and how its architecture fits together.

Why Kubernetes?

If you are familiar with Docker, Podman, or another container tool, you already know that these tools can create and run containers. In other words, they can run containerized applications, which is fine.

However, they are not designed to automate, orchestrate, or manage containerized applications at scale. With hundreds or thousands of containers, doing this by hand is difficult and often impractical, especially now that heavy applications such as core banking systems are being containerized.

Hence the need for a tool that provides orchestration, automation, and high availability for hundreds or thousands of containerized applications. Kubernetes, the subject of this lesson, is one such tool.

What Is Kubernetes

Kubernetes is a container orchestration platform that manages containerized applications. It automates software deployment and makes it easy to scale an application environment up or down as required.

It was originally developed by Google, which donated it to the CNCF (Cloud Native Computing Foundation). The CNCF, which is part of the Linux Foundation, now maintains the project.

Before we can understand the Kubernetes architecture, we need to understand some basic Kubernetes terminology: in other words, Kubernetes components, objects, and features.

Kubernetes Components/Objects

Some of the Kubernetes components to learn in this introductory lesson are:

1. Node

Nodes are just servers. A node can be either a physical server (bare metal) or a virtual server.

2. Pod

The pod is the smallest execution unit in Kubernetes, unlike Docker or Podman, where the smallest unit is the container.

A pod is an abstraction over one or more containers, whichever container runtime runs them: Docker, CRI-O, or containerd. Kubernetes uses the pod as its smallest execution unit so that you can switch from, say, Docker to CRI-O or containerd without problems. The pod is just an abstraction over the container, and the pod is where your applications run.

So, your containerized applications run inside the pod irrespective of the container runtime you choose.

You should also know that a pod can hold more than one container. You can have two or even three containers in a pod. The usual pattern is one container per pod, but in some cases, depending on your deployment, you may choose to run more than one container in a pod.
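To make the idea of a multi-container pod concrete, here is a minimal sketch of a pod definition written as a Python dictionary and printed as JSON. The field names (apiVersion, kind, metadata, spec.containers) are the standard ones for a Pod object; the pod name, labels, container names, and images are assumptions chosen only for illustration.

```python
import json

# Hypothetical pod: the names, labels, and images below are illustrative only.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            # The main application container.
            {"name": "app", "image": "nginx:stable"},
            # A second container that shares the same pod.
            {
                "name": "helper",
                "image": "busybox:stable",
                "command": ["sh", "-c", "sleep 3600"],
            },
        ]
    },
}

# Collect the container names to show that one pod holds two containers.
container_names = [c["name"] for c in pod_manifest["spec"]["containers"]]

print(json.dumps(pod_manifest, indent=2))
print("containers in pod:", container_names)
```

Kubernetes accepts manifests in JSON as well as YAML, so the printed JSON could be saved to a file and applied with kubectl once a cluster is available.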

3. Service

Pods are ephemeral by nature: a pod can die at any time, and when it dies, its IP address goes with it. When Kubernetes automatically recreates the pod, the new pod gets a different IP address. Other components or applications that talk to the pod by IP address can therefore no longer reach it. To avoid this problem, Kubernetes uses services.

A stable IP address is assigned to the service instead of the pod, and the service forwards each request to a pod behind it. The service therefore also acts as a load balancer, spreading requests across the pods that back it. How a pod is picked depends on how the cluster's proxy is configured; it is not guaranteed to be the least busy pod.

Broadly, there are two kinds of services: internal and external. An internal service is reachable only from inside the cluster, for example by other pods, while an external service is reachable from outside the cluster by external resources. In Kubernetes terms, the default ClusterIP type is internal, while the NodePort and LoadBalancer types expose a service externally.

Now that we understand these basic Kubernetes components, let's look at the architecture of Kubernetes.
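The relationship between a service and its pods can be sketched with a small toy model. This is not how Kubernetes is implemented; it only illustrates that a service keeps one stable address and finds its pods by matching labels, so a recreated pod with a new IP is picked up automatically. The class names, IP addresses, and labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict


@dataclass
class Service:
    name: str
    cluster_ip: str  # stable address that clients use
    selector: dict   # labels a pod must carry to receive traffic

    def endpoints(self, pods):
        """Return the IPs of all pods whose labels match the selector."""
        return [
            p.ip
            for p in pods
            if all(p.labels.get(k) == v for k, v in self.selector.items())
        ]


service = Service(name="web", cluster_ip="10.96.0.10", selector={"app": "web"})

pods = [
    Pod("web-1", "10.244.1.5", {"app": "web"}),
    Pod("db-1", "10.244.2.7", {"app": "db"}),
]
before = service.endpoints(pods)

# web-1 dies and is recreated as a new pod with a different IP address.
pods[0] = Pod("web-2", "10.244.3.9", {"app": "web"})
after = service.endpoints(pods)

print("endpoints before:", before)
print("endpoints after: ", after)
print("service address: ", service.cluster_ip)
```

Clients only ever use the service address, which is why the change of pod IP does not affect them.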

Kubernetes Architecture

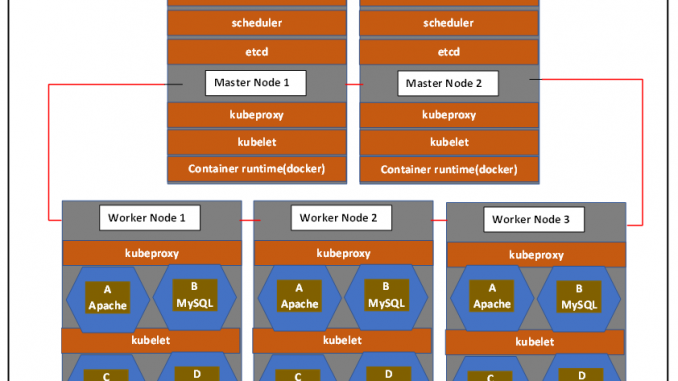

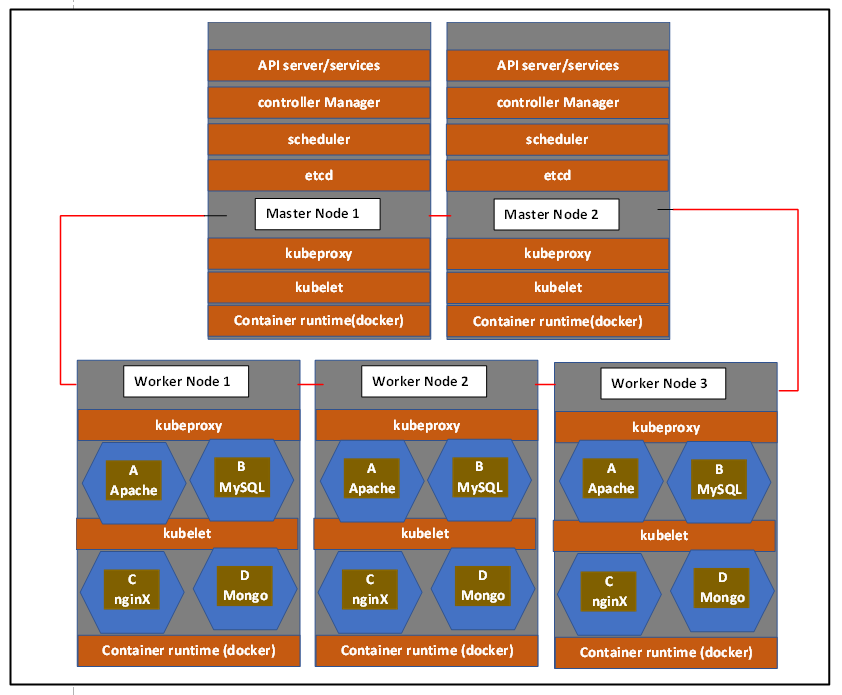

A Kubernetes cluster consists of a number of nodes. Clustering means joining two or more machines so that they work together. In the Kubernetes architecture diagram above, a number of servers, also called nodes, are joined together.

In Kubernetes, we have the master node, also called the control plane node, and the worker node, also called the compute node.

The control plane, or master node, controls the cluster, while the worker nodes are where your applications are deployed. Worker nodes therefore typically have more compute resources than control plane nodes. For high availability, run more than one of each: at least two worker nodes, and usually three control plane nodes, because etcd (described below) needs a majority of its members to stay available.
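A short piece of arithmetic helps when sizing the control plane. etcd, the data store that runs on the control plane, accepts a write only when a majority of its members agree. The sketch below assumes one etcd member per control plane node, which is a common layout but not the only one, and shows how many member failures each cluster size can survive.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of members."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 5):
    print(f"{n} member(s): quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Two members tolerate no failures at all, while three tolerate one, which is why highly available control planes are usually built with an odd number of nodes.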

So let's talk about the basic services that must be present on those nodes.

The services that must be present on every node in a Kubernetes cluster are:

1. Container runtime.

Several container runtimes are compatible with Kubernetes, including Docker, CRI-O, and containerd. In the architecture above, the container runtime is Docker. The container runtime is responsible for running the containers in the Kubernetes cluster. Note that since Kubernetes 1.24, Docker Engine needs the cri-dockerd adapter to work as a runtime, because the built-in dockershim was removed.

2. Kubelet service

The kubelet service on each node interfaces with both the container runtime and the node itself. The kubelet also communicates with the API server on the master node, so when a configuration request comes into the cluster, for example to create and start a pod, the kubelet on the chosen node picks up that configuration and performs the action.

Because the kubelet also interfaces with the node, it knows how to take resources from the node, such as CPU and memory, and assign them to every pod that is started and running on that node.

3. Kube-proxy

Kube-proxy is responsible for network communication within and between nodes. When a request arrives at a Kubernetes service, kube-proxy forwards it from the service to a pod.

By default, kube-proxy can forward a request to any ready pod behind the service, on any node. Kubernetes can also be configured to keep traffic on the node where the request was made, for example by setting internalTrafficPolicy: Local on the service. In that case the request is delivered faster because it does not have to travel to another node.
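The choice of a backing pod can be pictured with a toy model. This is not kube-proxy's real implementation, and the prefer_local switch is a hypothetical stand-in for node-local routing. It also falls back to any pod when no local one exists, which is a simplification made for this sketch.

```python
import random


def pick_backend(endpoints, caller_node, prefer_local=False, rng=random):
    """Pick one pod for a request.

    endpoints: list of (pod_name, node_name) tuples backing a service.
    prefer_local: hypothetical switch; when True, only pods on the
    caller's node are considered, as long as at least one exists.
    """
    candidates = endpoints
    if prefer_local:
        local = [e for e in endpoints if e[1] == caller_node]
        if local:
            candidates = local
    # Choose at random among the remaining candidates.
    return rng.choice(candidates)


endpoints = [("web-1", "node-a"), ("web-2", "node-b"), ("web-3", "node-b")]

anywhere = pick_backend(endpoints, caller_node="node-a")
local_only = pick_backend(endpoints, caller_node="node-a", prefer_local=True)

print("any node:  ", anywhere)
print("local node:", local_only)
```

With prefer_local enabled, a request made on node-a always lands on web-1, the only replica on that node; without it, any of the three replicas may be chosen.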

Because the control plane controls the Kubernetes cluster, other services must also run on the master node for the cluster to function well. These services are:

4. API server/service

The API server, or API service, is the door into the cluster: if you are going to interface with Kubernetes, you have to go through the API server. To communicate with Kubernetes, you use client tools such as kubectl, the command-line tool that sends your commands to the API server.

You can also communicate with Kubernetes through a GUI such as the Kubernetes Dashboard, or by calling the Kubernetes API directly.
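Whichever client you use, the request ends up as an HTTP call to a REST path on the API server. The helper below is a small sketch, not part of any Kubernetes library, that builds those paths for namespaced resources. The function name and its parameters are hypothetical; the path layout (/api/v1 for core resources, /apis/<group>/<version> for named API groups) follows the Kubernetes API conventions.

```python
def resource_path(resource, namespace, group="", version="v1", name=None):
    """Build the REST path for a namespaced Kubernetes resource.

    Core resources (pods, services) live under /api/<version>;
    resources in a named API group (for example "apps") live under
    /apis/<group>/<version>.
    """
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


pods_path = resource_path("pods", "default")
one_pod_path = resource_path("pods", "default", name="web")
deployments_path = resource_path("deployments", "default", group="apps")

print(pods_path)
print(one_pod_path)
print(deployments_path)
```

For example, listing the pods in the default namespace with kubectl results in a GET request on the first path.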

5. Scheduler

Once the API server has authenticated and accepted your request, the scheduler takes over and chooses the node that each new pod should run on, so that load is distributed across the nodes. It is smart: it takes into account, for example, the resources a pod needs and the resources each node has free. After that, the kubelet on the chosen node takes over from the scheduler and creates and starts the pod.

So, the scheduler only plans and distributes, while the kubelet does the work.
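The scheduler's decision can be thought of as two steps: filter out the nodes that cannot run the pod, then score the remaining nodes and pick the best one. The sketch below is a heavily simplified toy version of that idea. The node names, the resource figures, and the scoring rule (most CPU left over) are assumptions for illustration; the real scheduler weighs many more factors.

```python
def schedule(pod_request, nodes):
    """Return the name of the node chosen for the pod, or None.

    pod_request: {"cpu": millicores, "memory": MiB} the pod asks for.
    nodes: {node_name: {"cpu": free millicores, "memory": free MiB}}.
    """
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = {
        name: free
        for name, free in nodes.items()
        if free["cpu"] >= pod_request["cpu"]
        and free["memory"] >= pod_request["memory"]
    }
    if not feasible:
        return None  # no node fits, so the pod would stay unscheduled

    # Score: prefer the node with the most CPU left after placement.
    return max(feasible, key=lambda n: feasible[n]["cpu"] - pod_request["cpu"])


nodes = {
    "worker-1": {"cpu": 500, "memory": 1024},
    "worker-2": {"cpu": 2000, "memory": 4096},
    "worker-3": {"cpu": 1500, "memory": 256},
}

chosen = schedule({"cpu": 1000, "memory": 512}, nodes)
too_big = schedule({"cpu": 8000, "memory": 512}, nodes)

print("chosen node:", chosen)
print("oversized pod:", too_big)
```

Here worker-1 is filtered out for lack of CPU and worker-3 for lack of memory, so the pod goes to worker-2. The scheduler only records that decision; starting the pod is still the kubelet's job.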

6. Controller Manager

With all these activities going on in the cluster, there must be a service responsible for watching over them. This very important service is the controller manager.

The controller manager takes care of cluster operations, such as noticing changes in the cluster. For example, if a pod that belongs to a deployment dies, the controller manager creates a replacement pod, which the scheduler then assigns to a node.

The controller manager is the service in charge of knowing what is going on in the cluster, receiving updates about it, and ensuring that everything matches the desired state.
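The controller manager's behaviour is often described as a control loop: compare the desired state with the actual state and correct any difference. Below is a minimal toy sketch of one such loop for a fixed number of pod replicas. The function, the pod naming scheme, and the replica count are hypothetical and do not mirror the real controller code.

```python
import itertools

# Counter used to give each newly created pod a unique, hypothetical name.
_suffix = itertools.count(1)


def reconcile(desired_replicas, running_pods):
    """Return the pod list after one pass of a toy control loop."""
    pods = list(running_pods)
    # Too few pods: create replacements until the count matches.
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_suffix)}")
    # Too many pods: remove the surplus.
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


pods = reconcile(3, [])        # initial rollout creates three pods
pods.remove("web-2")           # one pod dies
healed = reconcile(3, pods)    # the next pass restores the replica count

print("after failure:", pods)
print("after reconcile:", healed)
```

The loop never repairs the dead pod; it simply notices that only two pods exist where three are wanted and creates a new one.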

7. etcd

It is also imperative that the state of all these activities be stored, and the service responsible for this is etcd. etcd is a distributed key-value store that holds the cluster's configuration and current state, such as which pods exist and which nodes they run on. It does not store application logs, and the data is stored as key-value entries rather than as YAML files.

Having understood the Kubernetes architecture, in the next lesson we will see, step by step, how Kubernetes can be installed.
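Since etcd is a key-value store, a rough mental model is a dictionary keyed by object path. The class below is an in-memory stand-in written for this lesson, not the etcd client API. The key layout and the stored values are simplified assumptions; a real cluster stores serialized API objects.

```python
class ToyStore:
    """In-memory stand-in for a key-value store such as etcd."""

    def __init__(self):
        self._data = {}
        self.revision = 0  # incremented on every write

    def put(self, key, value):
        """Store a value under a key and return the new revision."""
        self.revision += 1
        self._data[key] = value
        return self.revision

    def get(self, key):
        """Return the value for a key, or None if it does not exist."""
        return self._data.get(key)

    def list_prefix(self, prefix):
        """Return all keys that start with the given prefix, sorted."""
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyStore()
# Hypothetical keys and values, for illustration only.
store.put("/registry/pods/default/web-1", {"node": "worker-2", "phase": "Running"})
store.put("/registry/pods/default/web-2", {"node": "worker-1", "phase": "Pending"})
store.put("/registry/services/default/web", {"clusterIP": "10.96.0.10"})

pod_keys = store.list_prefix("/registry/pods/")

print(pod_keys)
print("revision:", store.revision)
```

Every component that needs to know the state of the cluster reads it through the API server, which in turn reads and writes entries like these.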

I like your simple and clear introductory notes on kubernetes. I am interested in learning kubernetes. Just wondering if Tekneed has a complete course on kubernetes that prepares students for the kubernetes certification just like how we have linux notes/tutorials that prepares students for RHCSA and RHCE.

Hi Bechem,

We are working on it. We will let you know when we have the complete material.