Last updated: June 2025


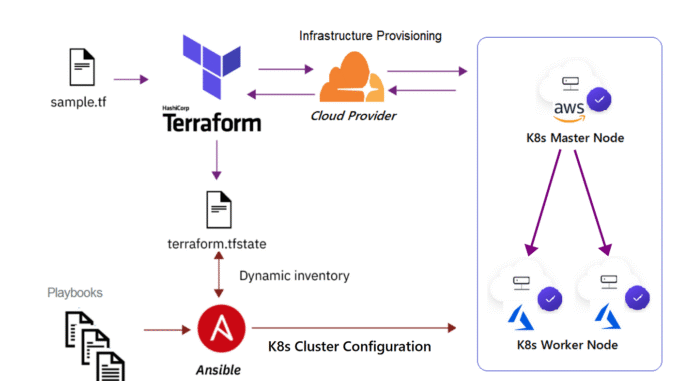

In our previous lesson, we explored the manual deployment of a Kubernetes cluster. In this lesson, we take it a step further and automate the entire on-premises deployment: first provisioning virtual machines on the VMware vSphere/vCenter platform using Terraform, then setting up a self-managed Kubernetes cluster on those VMs using Ansible.

Note that the Ansible part of this process also works if your VMs run as IaaS on a cloud platform (Azure, AWS, GCP, OCI, etc.).

The architecture of this deployment is the same one we used in our previous lesson.

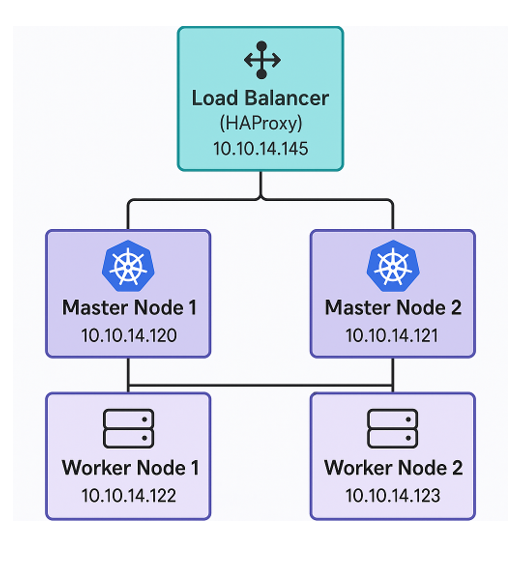

Deployment Topology

In this setup, we’re building a small but production-ready Kubernetes cluster. It will include:

- One (1) Load Balancer

- Two (2) Master (Control Plane) Nodes

- Two (2) Worker Nodes

The Load Balancer (HAProxy) sits in front of the two master nodes and forwards all traffic to the Kubernetes API.

You’ll need five servers (virtual or physical). The same approach can be used to scale up to dozens or hundreds of nodes if needed.

Node Layout

All machines will run Ubuntu 24.04 LTS. Below are the IP addresses for each node:

| Role | IP Address |
|---|---|
| Load Balancer | 10.10.14.145 |
| Master Node 1 | 10.10.14.120 |
| Master Node 2 | 10.10.14.121 |
| Worker Node 1 | 10.10.14.122 |
| Worker Node 2 | 10.10.14.123 |


Required Ports

Make sure the ports below are open on the relevant nodes. The playbook later in this lesson automates this step as well.

| Component | Port(s) | Purpose |
|---|---|---|
| Load Balancer | 6443 | Forwards traffic to Kubernetes API |
| Load Balancer | 8404 (optional) | HAProxy monitoring (if enabled) |
| All Nodes | 6443 | API server communication |
| All Nodes | 10250 | Kubelet – control and monitoring |
| All Nodes | 30000–32767 | NodePort – for exposed services |
| Master Nodes only | 2379–2380 | etcd – stores cluster data |
| Master Nodes only | 10257 | Controller Manager |
| Master Nodes only | 10259 | Scheduler |
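As a quick way to compare the table above with live machines, here is a minimal sketch (not part of the original lesson) that probes each required TCP port using bash's built-in /dev/tcp redirection. The function names and the role keywords (lb, master, worker) are assumptions made for illustration, and the NodePort range is left out for brevity. A port only shows as open once something is listening on it, so most checks will report closed until the cluster is running.

```shell
#!/usr/bin/env bash
# Required TCP ports per role, copied from the table above.
# The role keywords (lb, master, worker) are illustrative names.
ports_for_role() {
  case "$1" in
    lb)     echo "6443 8404" ;;
    master) echo "6443 10250 2379 2380 10257 10259" ;;
    worker) echo "6443 10250" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Print the state of every required port for one node.
check_node() {
  local role=$1 host=$2 port ports
  ports=$(ports_for_role "$role") || return 1
  for port in $ports; do
    if port_open "$host" "$port"; then
      echo "$host:$port open"
    else
      echo "$host:$port closed"
    fi
  done
}
```

For example, `check_node lb 10.10.14.145` or `check_node master 10.10.14.120` would report on the load balancer and the first master node.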

Before diving into automation, it’s crucial that you have a solid understanding of the underlying technologies. Being able to manually deploy a Kubernetes cluster helps you grasp the finer details involved. A lot of thought went into designing the Ansible playbook, and every decision was intentional. These considerations have been thoroughly explained in the previous lessons. As you go through the playbook, and especially when you watch the accompanying video (link at the end of this lesson), you’ll gain a deeper understanding of why certain tasks and plays were included.

Now that we understand the setup, it's time to begin automating the on-premises deployment of the Kubernetes cluster.

We’ll start by provisioning the five virtual machines on the VMware vSphere/vCenter platform using Terraform.

Below is the Terraform script that handles this deployment.

Step By Step Guide of How To Deploy Virtual Machines (VMs) on VMware vCenter/vSphere/ESXi Using Terraform

Step 1

We'll be using five (5) files, as shown in the directory tree below. The Terraform scripts have been designed to be highly reusable (thanks to Victor@TekNeed).

The only file you'll need to modify to fit your environment is "terraform.tfvars". Simply adjust the variables in that file according to your specific setup.

tekneed@Laptop01 ~/IaC/tekneed/vmware-vm/linux-vm-count
$ tree
.
|-- main.tf
|-- output.tf
|-- providers.tf
|-- terraform.tfvars
`-- variables.tf

main.tf

data "vsphere_datacenter" "dc" {
  name = var.datacenter
}

data "vsphere_datastore" "datastore" {
  name          = var.datastore
  datacenter_id = data.vsphere_datacenter.dc.id
}

data "vsphere_network" "network" {
  name          = var.network
  datacenter_id = data.vsphere_datacenter.dc.id
}

data "vsphere_resource_pool" "pool" {
  name          = var.resource_pool
  datacenter_id = data.vsphere_datacenter.dc.id
}

data "vsphere_virtual_machine" "template" {
  name          = var.template_name
  datacenter_id = data.vsphere_datacenter.dc.id
}

resource "vsphere_virtual_machine" "vm" {
  count = length(var.vm_list)

  name             = var.vm_list[count.index].name
  resource_pool_id = data.vsphere_resource_pool.pool.id
  datastore_id     = data.vsphere_datastore.datastore.id
  num_cpus         = var.vm_list[count.index].cpu
  memory           = var.vm_list[count.index].memory
  guest_id         = data.vsphere_virtual_machine.template.guest_id
  firmware         = var.vm_list[count.index].firmware

  network_interface {
    network_id   = data.vsphere_network.network.id
    adapter_type = "vmxnet3"
  }

  disk {
    label            = "${var.vm_list[count.index].name}.vmdk"
    size             = var.vm_list[count.index].disk_size
    eagerly_scrub    = false
    thin_provisioned = true
  }

  clone {
    template_uuid = data.vsphere_virtual_machine.template.id

    customize {
      linux_options {
        host_name = var.vm_list[count.index].name
        domain    = ""
      }

      network_interface {
        ipv4_address    = var.vm_list[count.index].ipv4_address
        ipv4_netmask    = var.vm_list[count.index].ipv4_netmask
        dns_server_list = var.dns_servers
      }

      ipv4_gateway = var.vm_list[count.index].ipv4_gateway
    }
  }
}

variables.tf

variable "vusername" {
  type        = string
  description = "Username for vCenter"
}

variable "vpassword" {
  type        = string
  description = "Password for vCenter"
  sensitive   = true
}

variable "vcenter" {
  type        = string
  description = "vCenter server address"
}

variable "datacenter" {
  type        = string
  description = "Name of the vSphere datacenter"
}

variable "resource_pool" {
  type        = string
  description = "Resource pool where the VM will be deployed"
}

variable "datastore" {
  type        = string
  description = "Datastore for the VM"
}

variable "network" {
  type        = string
  description = "Network name for the VM"
}

variable "template_name" {
  type        = string
  description = "Name of the template where the VM will be created from"
}

variable "dns_servers" {
  type        = list(string)
  description = "List of DNS servers"
}

variable "vm_list" {
  description = "List of virtual machines to create"
  type = list(object({
    name         = string
    ipv4_address = string
    ipv4_netmask = number
    ipv4_gateway = string
    cpu          = number
    memory       = number
    disk_size    = number
    firmware     = string
  }))
}

terraform.tfvars

#vusername = "john.bull@tekneed.com"
#vpassword = "john.bull's password"
#vcenter = "vcenter FQDN or IP" #EDIT

datacenter    = "Tekneed-DR-DC"
resource_pool = "PROD-CLUSTER/Resources/Prod Kubernetes"
datastore     = "k8s-Datastore01"
network       = "VLAN94"
template_name = "ubuntu-24.04-template2"
dns_servers   = ["10.10.10.19", "10.10.10.20"]

vm_list = [
  {
    name         = "haproxylb-01"
    ipv4_address = "10.10.14.145"
    ipv4_netmask = 24
    ipv4_gateway = "10.10.14.40"
    cpu          = 4
    memory       = 8000
    disk_size    = 100
    firmware     = "efi"
  },
  {
    name         = "mastern-01"
    ipv4_address = "10.10.14.120"
    ipv4_netmask = 24
    ipv4_gateway = "10.10.14.40"
    cpu          = 16
    memory       = 27000
    disk_size    = 200
    firmware     = "efi"
  },
  {
    name         = "mastern-02"
    ipv4_address = "10.10.14.121"
    ipv4_netmask = 24
    ipv4_gateway = "10.10.14.40"
    cpu          = 16
    memory       = 27000
    disk_size    = 200
    firmware     = "efi"
  },
  {
    name         = "workern-01"
    ipv4_address = "10.10.14.122"
    ipv4_netmask = 24
    ipv4_gateway = "10.10.14.40"
    cpu          = 40
    memory       = 50000
    disk_size    = 350
    firmware     = "efi"
  },
  {
    name         = "workern-02"
    ipv4_address = "10.10.14.123"
    ipv4_netmask = 24
    ipv4_gateway = "10.10.14.40"
    cpu          = 40
    memory       = 50000
    disk_size    = 350
    firmware     = "efi"
  }
]

providers.tf

provider "vsphere" {
  user                 = var.vusername
  password             = var.vpassword
  vsphere_server       = var.vcenter
  allow_unverified_ssl = true
}

output.tf

output "vm_names" {
  description = "Names of the deployed virtual machines"
  value       = [for vm in vsphere_virtual_machine.vm : vm.name]
}

output "vm_ipv4_addresses" {
  description = "IPv4 addresses of the deployed virtual machines"
  value       = [for vm in vsphere_virtual_machine.vm : vm.clone[0].customize[0].network_interface[0].ipv4_address]
}

output "vm_power_states" {
  description = "Power states of the deployed virtual machines"
  value       = [for vm in vsphere_virtual_machine.vm : vm.power_state]
}

Step 2

Initialize the working directory that contains your Terraform configuration files

# terraform init

Step 3

Deploy the Virtual Machines (VMs)
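One cautious way to run this step, sketched here as an assumption rather than the workflow used in this lesson, is to validate the configuration and save the plan to a file, review it, and then apply exactly that saved plan. The function names plan_vms and apply_vms and the plan file name tfplan are illustrative; the terraform subcommands themselves are standard.

```shell
#!/usr/bin/env bash
# Validate the configuration and write the execution plan to a file.
plan_vms() {
  local plan_file=${1:-tfplan}
  terraform init -input=false &&
    terraform validate &&
    terraform plan -out="$plan_file"
}

# Apply exactly the plan that was saved. Terraform does not prompt for
# confirmation when it is given a saved plan file.
apply_vms() {
  local plan_file=${1:-tfplan}
  terraform apply "$plan_file"
}
```

Run `plan_vms` from the directory that holds the five files, read the plan output, and then run `apply_vms`. The plain apply command that follows achieves the same result with an interactive confirmation.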

# terraform apply
........................
Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

vsphere_virtual_machine.vm[4]: Creating...
vsphere_virtual_machine.vm[3]: Creating...
vsphere_virtual_machine.vm[2]: Creating...
vsphere_virtual_machine.vm[1]: Creating...
vsphere_virtual_machine.vm[0]: Creating...
vsphere_virtual_machine.vm[4]: Still creating... [10s elapsed]
.......................
Apply complete! Resources: 5 added, 0 changed, 0 destroyed.

Outputs:

vm_ipv4_addresses = [
  "10.10.14.243",
  "10.10.14.244",
  "10.10.14.245",
  "10.10.14.246",
  "10.10.14.247",
]
vm_names = [
  "haproxylb-01",
  "mastern-01",
  "mastern-02",
  "workern-01",
  "workern-02",
]
vm_power_states = [
  "on",
  "on",
  "on",
  "on",
  "on",
]

Handling Sensitive Information in Terraform

To securely pass sensitive information (such as vCenter and domain credentials) to Terraform, environment variables can be used. Terraform automatically loads any environment variable prefixed with TF_VAR_ as input variables.

Example

export TF_VAR_vusername="john.bull@tekneed.com"                    # vCenterUsername
export TF_VAR_vpassword="your_secure_password"                     # vCenterPassword
export TF_VAR_admin_password="your_windows_admin_password"         # WindowsHostAdminpasswd
export TF_VAR_domain_admin_user="john.bull"                        # DomainAdminUsername
export TF_VAR_domain_admin_password="your_domain_password"         # DomainAdminPassword
export TF_VAR_vcenter="fmdqvcenter.tekneed.com"                    # vCenterFQDN or IP
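To keep a password out of the shell history, the value can be read from a prompt instead of being typed on the command line. A minimal sketch, assuming bash; the function name read_tf_secret is made up for illustration:

```shell
#!/usr/bin/env bash
# Read a value without echoing it and export it as TF_VAR_<name>.
# Usage: read_tf_secret vpassword
read_tf_secret() {
  local name=$1 value
  # -r keeps backslashes literal, -s disables terminal echo,
  # IFS= preserves leading and trailing spaces in the value.
  IFS= read -rs -p "Value for ${name}: " value || return 1
  echo >&2   # move to a new line after the hidden input
  export "TF_VAR_${name}=${value}"
}
```

Calling `read_tf_secret vpassword` in the shell that will run Terraform sets TF_VAR_vpassword for that session only.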

Better Practice: Use a Secret Manager

While exporting variables is simple, it’s not ideal for production or CI/CD pipelines due to security risks (e.g., command history, accidental leaks).

A more secure approach is to use a secret management solution, such as:

- Azure Key Vault

- AWS Secrets Manager

- HashiCorp Vault

- GCP Secret Manager

For example, using Azure Key Vault:

- Store secrets (like passwords and usernames) in Key Vault.

- Use the azurerm Terraform provider (terraform-provider-azurerm) and its Key Vault data source to inject secrets into Terraform securely.
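As an alternative to the Key Vault data source, a secret can also be pulled with the Azure CLI and handed to Terraform through a TF_VAR_ variable. The sketch below assumes the az CLI is installed and already logged in. The function name is illustrative, and the vault and secret names in the usage line are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Fetch one secret from Azure Key Vault and export it as TF_VAR_<var>.
# Usage: load_keyvault_secret <vault-name> <secret-name> <terraform-variable>
load_keyvault_secret() {
  local vault=$1 secret=$2 tf_var=$3 value
  value=$(az keyvault secret show \
            --vault-name "$vault" \
            --name "$secret" \
            --query value \
            --output tsv) || return 1
  export "TF_VAR_${tf_var}=${value}"
}
```

For example, `load_keyvault_secret kv-tekneed-demo vcenter-password vpassword` would populate TF_VAR_vpassword without the value ever appearing on the command line.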

Now that we have our VMs ready, let’s proceed to deploying the Kubernetes cluster using Ansible.

Step By Step Guide of How To Deploy Kubernetes Cluster Using Ansible

For my Ansible setup, the Ansible configuration file I used is below. Your setup may be different.

$ cat ansible.cfg
[defaults]
inventory=~/ansible/linux-inventory
ask_pass=false
remote_user=ansu
collection_paths = /home/ansu/.ansible/collections:/usr/share/ansible/collections

[privilege_escalation]
become=true
become_method=sudo
become_user=root
become_ask_pass=false

The inventory file I used is below. Note that the [localhost_k8s] group holds localhost, which the playbook uses to store the cluster join credentials that the other hosts read.

$ cat linux-inventory
[prod_k8s_master]
mastern-01 ansible_host=10.10.14.120
mastern-02 ansible_host=10.10.14.121

[prod_k8s_worker]
workern-01 ansible_host=10.10.14.122
workern-02 ansible_host=10.10.14.123

[prod_k8s_lb]
haproxylb-01 ansible_host=10.10.14.145

[localhost_k8s]
localhost ansible_connection=local
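For larger clusters, an inventory in this layout could be generated from name and IP pairs rather than typed by hand. The following is a minimal sketch under the assumption that host names keep the prefixes used in this lesson (haproxylb-, mastern-, workern-); the function name make_inventory is hypothetical.

```shell
#!/usr/bin/env bash
# Read "name ip" pairs on stdin and print an inventory in the layout above.
# Hosts are assigned to groups by their name prefix.
make_inventory() {
  local name ip masters="" workers="" lbs=""
  while read -r name ip; do
    [ -z "$name" ] && continue
    case "$name" in
      mastern-*)   masters+="${name} ansible_host=${ip}"$'\n' ;;
      workern-*)   workers+="${name} ansible_host=${ip}"$'\n' ;;
      haproxylb-*) lbs+="${name} ansible_host=${ip}"$'\n' ;;
      *) echo "unrecognised host name: ${name}" >&2; return 1 ;;
    esac
  done
  printf '[prod_k8s_master]\n%s\n' "$masters"
  printf '[prod_k8s_worker]\n%s\n' "$workers"
  printf '[prod_k8s_lb]\n%s\n' "$lbs"
  printf '[localhost_k8s]\nlocalhost ansible_connection=local\n'
}
```

Feeding it the five name and IP pairs from terraform.tfvars, one pair per line, reproduces the groups shown above.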

Step 1 – Prepare your playbook

The Ansible playbook that will be used is shown below. It follows the same steps we used when we deployed the cluster manually.

ansu@101-Ansible-01:~/ansible$ vim deploy-k8s-cluster3

---
## Install & configure Loadbalancer for k8s master nodes ##
- name: Configure HAProxy Load Balancer for Kubernetes master nodes
  hosts: prod_k8s_lb
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails

  tasks:
    - name: Install HAProxy
      apt:
        name: haproxy
        state: present
        update_cache: yes

    - name: Edit/Append HAProxy configuration file
      blockinfile:
        path: /etc/haproxy/haproxy.cfg
        marker: "# {mark} ANSIBLE K8S CONFIG"
        block: |
          listen stats
              bind :8404
              stats enable
              stats uri /stats
              stats refresh 10s

          frontend kubernetes
              bind 10.10.14.145:6443
              option tcplog
              mode tcp
              default_backend kubernetes-master-nodes

          backend kubernetes-master-nodes
              mode tcp
              balance roundrobin
              option tcp-check
              server mastern-01 10.10.14.120:6443 check fall 3 rise 2
              server mastern-02 10.10.14.121:6443 check fall 3 rise 2

    - name: Restart HAProxy
      service:
        name: haproxy
        state: restarted
        enabled: yes

    - name: Ensure ufw is installed and enabled on load balancer node
      apt:
        name: ufw
        state: present
        update_cache: yes

    - name: Enable UFW firewall on load balancer node (allow by default)
      ufw:
        state: enabled
        policy: allow

    - name: Open required ports on load balancer node
      ufw:
        rule: allow
        port: "{{ item }}"
        proto: tcp
      loop:
        - 8404  #HAProxy stats interface (optional, if enabled in config)
        - 6443  #Kubernetes API server

#############################
- name: Prepare all master and worker nodes for Kubernetes
  hosts: prod_k8s_master:prod_k8s_worker
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails
  vars:
    containerd_version: "1.7.14"
    runc_version: "1.1.12"
    crictl_version: "1.29.0"  # Keep in line with the Kubernetes minor version installed below (v1.29)

  tasks:
    - name: Ensure ufw is installed on all kubernetes nodes
      apt:
        name: ufw
        state: present
        update_cache: yes

    - name: Open common Kubernetes ports on all kubernetes nodes
      ufw:
        rule: allow
        port: "{{ item }}"
        proto: tcp
      loop:
        - 6443         # Kubernetes API server
        - 10250        # Kubelet API
        - 30000:32767  # NodePort range

    - name: Open master-only ports
      ufw:
        rule: allow
        port: "{{ item }}"
        proto: tcp
      when: "'prod_k8s_master' in group_names"
      loop:
        - 2379:2380  # etcd
        - 10257      # Controller Manager
        - 10259      # Scheduler

    #### STEP 1 - BEGIN ####
    # - name: Disable swap
    #   shell: |
    #     swapoff -a
    #     sed -i '/ swap / s/^/#/' /etc/fstab

    - name: Disable swap
      command: swapoff -a
      when: ansible_swaptotal_mb > 0

    - name: Comment out swap entries in /etc/fstab
      replace:
        path: /etc/fstab
        regexp: '^([^#].*\s+swap\s+)'
        replace: '# \1'
    #### STEP 1 - END ####

    #### STEP 2 - BEGIN ####
    # The copy task writes to /etc/modules-load.d/k8s.conf, which ensures the overlay
    # and br_netfilter modules will be automatically loaded on every boot.
    - name: Ensure the overlay and br_netfilter modules are Loaded at boot time
      copy:
        dest: /etc/modules-load.d/k8s.conf
        content: |
          overlay
          br_netfilter

    - name: Load modules immediately
      modprobe:
        name: "{{ item }}"
        state: present
      loop:
        - overlay
        - br_netfilter
    #### STEP 2 - END ####

    #### STEP 3 - BEGIN ####
    - name: Ensure IP forwarding is enabled at the kernel level for Kubernetes
      copy:
        dest: /etc/sysctl.d/k8s.conf
        content: |
          net.bridge.bridge-nf-call-iptables = 1
          net.bridge.bridge-nf-call-ip6tables = 1
          net.ipv4.ip_forward = 1

    - name: Apply sysctl parameters immediately
      command: sysctl --system
    #### STEP 3 - END ####

    #### STEP 4 - BEGIN ####
    - name: Download and Install prerequisite packages
      apt:
        name:
          - curl
          - gnupg
          - ca-certificates
          - lsb-release
          - apt-transport-https
        update_cache: yes
    #### STEP 4 - END ####

    #### STEP 5 - BEGIN ####
    #Install container Runtime
    ## install containerd using the steps below
    - name: Download containerd binaries
      get_url:
        url: "https://github.com/containerd/containerd/releases/download/v{{ containerd_version }}/containerd-{{ containerd_version }}-linux-amd64.tar.gz"
        dest: "/tmp/containerd.tar.gz"
        timeout: 60  #increase the timeout

    - name: Extract the downloaded containerd files
      unarchive:
        src: /tmp/containerd.tar.gz
        dest: /usr/local
        remote_src: yes

    - name: Ensure systemd directory for containerd exists
      file:
        path: /usr/local/lib/systemd/system
        state: directory
        mode: '0755'

    # To make/configure containerd run as a systemd service
    - name: Download containerd systemd service file
      get_url:
        url: https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
        dest: /usr/local/lib/systemd/system/containerd.service
        timeout: 60  #increase the timeout

    - name: Create containerd config directory
      file:
        path: /etc/containerd
        state: directory

    - name: Generate containerd default config (config.toml) & save it to /etc/containerd
      shell: "containerd config default | tee /etc/containerd/config.toml"
      args:
        creates: /etc/containerd/config.toml

    - name: Set SystemdCgroup = true in containerd config
      replace:
        path: /etc/containerd/config.toml
        regexp: 'SystemdCgroup = false'
        replace: 'SystemdCgroup = true'

    - name: Restart containerd with new config
      service:
        name: containerd
        state: restarted
        enabled: true
    #### STEP 5 - END ####

    #### STEP 6 - BEGIN ####
    #Install runc
    - name: Download runc binary
      get_url:
        url: "https://github.com/opencontainers/runc/releases/download/v{{ runc_version }}/runc.amd64"
        dest: /tmp/runc.amd64
        mode: '0755'
        timeout: 60
      retries: 3
      delay: 10
      register: download_runc
      until: download_runc is succeeded

    - name: Install runc
      copy:
        src: /tmp/runc.amd64
        dest: /usr/local/sbin/runc
        mode: '0755'
        remote_src: yes
    #### STEP 6 - END ####

    #### STEP 7 - BEGIN ####
    ##configure crictl to work with containerd##
    # Installation of the previous packages may have come with crictl
    - name: Check if crictl is already installed
      stat:
        path: /usr/local/bin/crictl
      register: crictl_binary

    # - name: Download crictl
    #   get_url:
    #     url: "https://github.com/kubernetes-sigs/cri-tools/releases/download/v{{ crictl_version }}/crictl-v{{ crictl_version }}-linux-amd64.tar.gz"
    #     dest: "/tmp/crictl.tar.gz"
    #     timeout: 60
    #   when: not crictl_binary.stat.exists
    #   register: download_crictl
    #   until: download_crictl is succeeded
    #   retries: 3
    #   delay: 10

    # - name: Extract and install crictl
    #   unarchive:
    #     src: "/tmp/crictl.tar.gz"
    #     dest: /usr/local/bin
    #     remote_src: yes
    #   when: not crictl_binary.stat.exists

    # To make crictl work with containerd
    - name: Create crictl config
      copy:
        dest: /etc/crictl.yaml
        content: |
          runtime-endpoint: unix:///var/run/containerd/containerd.sock
          timeout: 10
          debug: false
    #### STEP 7 - END ####

    #### STEP 8 - BEGIN ####
    ## install kubernetes packages/tools
    - name: Ensure APT keyring directory exists  # --- ADDED
      file:
        path: /etc/apt/keyrings
        state: directory
        mode: '0755'

    - name: Download Kubernetes release key if not already present
      get_url:
        url: https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key
        dest: /etc/apt/keyrings/kubernetes-apt-keyring.asc
        mode: '0644'
        force: no

    - name: Convert GPG key to keyring if not already converted
      command: >
        gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg /etc/apt/keyrings/kubernetes-apt-keyring.asc
      args:
        creates: /etc/apt/keyrings/kubernetes-apt-keyring.gpg

    - name: Add Kubernetes repo
      apt_repository:
        repo: "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /"
        state: present
        filename: kubernetes

    - name: Install Kubernetes packages/tools
      apt:
        name:
          - kubelet
          - kubeadm
          - kubectl
        state: present
        update_cache: yes
      register: apt_result
      until: apt_result is succeeded
      retries: 5
      delay: 30

    - name: Hold Kubernetes packages
      dpkg_selections:
        name: "{{ item }}"
        selection: hold
      loop:
        - kubelet
        - kubeadm
        - kubectl
      register: hold_result
      until: hold_result is succeeded
      retries: 5
      delay: 10

    # - name: Enable and start kubelet service
    #   systemd:
    #     name: kubelet
    #     enabled: yes
    #     state: started
    #### STEP 8 - END ####

#### STEP 9 - BEGIN ####
#Initialize control plane 1
- name: Initialize Kubernetes control plane on the first master node
  hosts: "{{ groups['prod_k8s_master'][0] }}"
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails

  tasks:
    - name: Initialize Kubernetes cluster with kubeadm on the first master node
      shell: |
        kubeadm init \
          --pod-network-cidr=192.168.0.0/16 \
          --control-plane-endpoint="10.10.14.145:6443" \
          --upload-certs --v=5 | tee /tmp/kubeadm_init.log
      args:
        creates: /etc/kubernetes/admin.conf

    - name: Wait for Kubernetes API server to be ready on port 6443
      wait_for:
        port: 6443
        host: "{{ ansible_host }}"
        delay: 10
        timeout: 300  #increase the timeout based on your network latency
        state: started

    - name: Extract kubeadm join token
      shell: |
        grep -A1 "kubeadm join" /tmp/kubeadm_init.log | grep "kubeadm join" | awk '{print $5}' | head -n 1 | tr -d '\n'
      register: kubeadm_token

    - name: Debug token
      debug:
        msg: "Token: {{ kubeadm_token.stdout }}"

    - name: Extract discovery-token-ca-cert-hash
      shell: grep -o 'sha256:[a-f0-9]\{64\}' /tmp/kubeadm_init.log | head -n 1
      #shell: grep -o 'sha256:[a-f0-9]*' /tmp/kubeadm_init.log
      #shell: grep -A1 "kubeadm join" /tmp/kubeadm_init.log | grep sha256 | awk '{print $5}'
      register: discovery_hash

    - name: Extract certificate key
      shell: grep -A5 "kubeadm join" /tmp/kubeadm_init.log | grep -- '--certificate-key' | awk '{print $3}'
      register: cert_key

- name: Set cluster join variables globally (on localhost)
  hosts: localhost
  gather_facts: false

  tasks:
    - name: Set global join fact
      set_fact:
        load_balancer_endpoint: "10.10.14.145:6443"
        join_token: "{{ hostvars[groups['prod_k8s_master'][0]]['kubeadm_token']['stdout'] | regex_replace('\n', '') }}"
        discovery_token_hash: "{{ hostvars[groups['prod_k8s_master'][0]]['discovery_hash']['stdout'] }}"
        control_plane_cert_key: "{{ hostvars[groups['prod_k8s_master'][0]]['cert_key']['stdout'] }}"
      delegate_facts: true
#### STEP 9 - END ####

#### STEP 10 - BEGIN ####
#configures the kubernetes user to communicate with kubernetes cluster using the kubectl command on master nodes
- name: Configure kubeconfig for ansu on master node 1
  hosts: "{{ groups['prod_k8s_master'][0] }}"
  become: yes
  any_errors_fatal: true

  tasks:
    - name: Ensure .kube directory exists for ansu
      file:
        path: /home/ansu/.kube
        state: directory
        owner: ansu
        group: ansu
        mode: '0755'
      become: yes

    - name: Copy admin.conf to ansu's kube config
      copy:
        src: /etc/kubernetes/admin.conf
        dest: /home/ansu/.kube/config
        remote_src: yes
        owner: ansu
        group: ansu
        mode: '0644'
        force: no  # <--- Only copy when the destination file does not exist yet; an existing file is never overwritten
      become: yes
#### STEP 10 - END ####

#### STEP 11 - BEGIN ####
- name: Install Calico CNI on master node 1 only
  hosts: prod_k8s_master
  become: yes
  any_errors_fatal: true

  tasks:
    # - name: Apply Calico CRDs first
    #   shell: |
    #     kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/crds.yaml \
    #       --kubeconfig=/etc/kubernetes/admin.conf --validate=false
    #   when: inventory_hostname == groups['prod_k8s_master'][0]

    - name: Check if Tigera operator is already installed
      shell: |
        kubectl get deployment tigera-operator -n tigera-operator --kubeconfig=/etc/kubernetes/admin.conf
      register: tigera_operator_check
      ignore_errors: yes
      when: inventory_hostname == groups['prod_k8s_master'][0]

    - name: Apply Tigera operator manifest (fresh install)
      shell: |
        kubectl apply --server-side --force-conflicts -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/tigera-operator.yaml \
          --kubeconfig=/etc/kubernetes/admin.conf --validate=false --v=5
      when:
        - inventory_hostname == groups['prod_k8s_master'][0]
        - tigera_operator_check.rc != 0

    - name: Wait for Calico CRDs to be established with the k8s API server, esp "installations.operator.tigera.io" CRD
      shell: |
        kubectl get crd installations.operator.tigera.io --kubeconfig=/etc/kubernetes/admin.conf
      register: calico_crd_check
      retries: 90
      delay: 5
      until: calico_crd_check.rc == 0
      when: inventory_hostname == groups['prod_k8s_master'][0]

    - name: Wait for Tigera operator deployment to be ready
      shell: |
        kubectl rollout status deployment tigera-operator -n tigera-operator --timeout=200s \
          --kubeconfig=/etc/kubernetes/admin.conf
      when: inventory_hostname == groups['prod_k8s_master'][0]

    - name: Apply Calico custom resources
      shell: |
        kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/custom-resources.yaml \
          --kubeconfig=/etc/kubernetes/admin.conf --validate=false
      when: inventory_hostname == groups['prod_k8s_master'][0]

    - name: Wait for Calico pods to be ready
      shell: |
        kubectl wait --for=condition=Available deployment/calico-kube-controllers -n calico-system --timeout=180s --kubeconfig=/etc/kubernetes/admin.conf
        kubectl wait --for=condition=Ready pods -l k8s-app=calico-node -n calico-system --timeout=180s --kubeconfig=/etc/kubernetes/admin.conf
      args:
        executable: /bin/bash
      register: calico_wait
      failed_when: calico_wait.rc != 0
      when: inventory_hostname == groups['prod_k8s_master'][0]
#### STEP 11 - END ####

#### STEP 12 - BEGIN ####
## Add a second control plane ##
- name: Join additional control plane nodes
  hosts: "{{ groups['prod_k8s_master'][1:] }}"
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails

  tasks:
    - name: Debug join variables
      debug:
        msg:
          - "Token: {{ hostvars['localhost']['join_token'] }}"
          - "CA Cert Hash: {{ hostvars['localhost']['discovery_token_hash'] }}"
          - "Cert Key: {{ hostvars['localhost']['control_plane_cert_key'] }}"

    - name: Join the second master node to the kubernetes cluster
      shell: |
        kubeadm join {{ hostvars['localhost']['load_balancer_endpoint'] }} \
          --token {{ hostvars['localhost']['join_token'] }} \
          --discovery-token-ca-cert-hash {{ hostvars['localhost']['discovery_token_hash'] }} \
          --control-plane \
          --certificate-key {{ hostvars['localhost']['control_plane_cert_key'] }} \
          --v=5
      args:
        creates: /etc/kubernetes/kubelet.conf
#### STEP 12 - END ####

#### STEP 13 - BEGIN ####
## Add the worker nodes##
- name: Join worker nodes to the Kubernetes cluster
  hosts: prod_k8s_worker
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails

  tasks:
    # A literal block (|) is used here, as in STEP 12, so the backslash line
    # continuations reach the shell unchanged.
    - name: Join the worker nodes to the cluster
      shell: |
        kubeadm join {{ hostvars['localhost']['load_balancer_endpoint'] }} \
          --token {{ hostvars['localhost']['join_token'] }} \
          --discovery-token-ca-cert-hash {{ hostvars['localhost']['discovery_token_hash'] }} \
          --v=5
      args:
        creates: /etc/kubernetes/kubelet.conf
#### STEP 13 - END ####

#### STEP 14 - BEGIN ####
- name: Configure kubeconfig for ansu on master node 2
  hosts: "{{ groups['prod_k8s_master'][1] }}"
  become: yes
  any_errors_fatal: true

  tasks:
    - name: Ensure .kube directory exists for ansu
      file:
        path: /home/ansu/.kube
        state: directory
        owner: ansu
        group: ansu
        mode: '0755'
      become: yes

    - name: Copy admin.conf to ansu's kube config
      copy:
        src: /etc/kubernetes/admin.conf
        dest: /home/ansu/.kube/config
        remote_src: yes
        owner: ansu
        group: ansu
        mode: '0644'
        force: no  # <--- Only copy when the destination file does not exist yet; an existing file is never overwritten
      become: yes
#### STEP 14 - END ####
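The three extraction tasks in STEP 9 rely on the layout of the join instructions that kubeadm init prints. The sketch below runs the same grep and awk pipelines against a small sample log, so the field positions can be checked without a cluster. The sample text is abbreviated, and every token, hash, and key in it is a made-up placeholder.

```shell
#!/usr/bin/env bash
# Write an abbreviated sample of the kubeadm init join instructions.
# All values below are placeholders, not real credentials.
log=$(mktemp)
cat > "$log" <<'EOF'
You can now join any number of control-plane nodes by running the following command on each as root:

  kubeadm join 10.10.14.145:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef \
    --control-plane --certificate-key fedcba9876543210fedcba9876543210fedcba9876543210fedcba9876543210

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.14.145:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef
EOF

# Same pipelines as the playbook tasks, pointed at the sample file.
# Token: fifth whitespace-separated field of the first "kubeadm join" line.
token=$(grep -A1 "kubeadm join" "$log" | grep "kubeadm join" | awk '{print $5}' | head -n 1 | tr -d '\n')

# CA cert hash: first "sha256:" followed by exactly 64 hex characters.
ca_hash=$(grep -o 'sha256:[a-f0-9]\{64\}' "$log" | head -n 1)

# Certificate key: third field of the line carrying --certificate-key.
cert_key=$(grep -A5 "kubeadm join" "$log" | grep -- '--certificate-key' | awk '{print $3}')

rm -f "$log"

echo "token:    $token"
echo "ca hash:  $ca_hash"
echo "cert key: $cert_key"
```

If a future kubeadm release changes the wording or line layout of this output, the field numbers in the awk calls are the parts most likely to need adjusting.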

Step 2 – Run the playbook

ansu@101-Ansible-01:~/ansible$ ansible-playbook deploy-k8s-cluster3

PLAY [Configure HAProxy Load Balancer for Kubernetes master nodes] ******************************************************************************************

TASK [Gathering Facts] **************************************************************************************************************************************
-----------------------
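Before a full run like the one above, it can be worth checking the playbook syntax and confirming that Ansible can reach every host group. This is a minimal sketch and not part of the original workflow; the function name preflight is hypothetical, while the group names and playbook file name are the ones used in this lesson.

```shell
#!/usr/bin/env bash
# Check playbook syntax, then confirm Ansible can reach every targeted group.
preflight() {
  local playbook=${1:-deploy-k8s-cluster3}
  ansible-playbook --syntax-check "$playbook" &&
    ansible 'prod_k8s_lb:prod_k8s_master:prod_k8s_worker' -m ping
}
```

Running `preflight` from the directory that holds ansible.cfg uses the inventory configured there.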

Step 3 – From any of your master nodes, or from your Ansible controller if kubectl is installed there, verify that the cluster is up

ansu@mastern-01:~$ kubectl get nodes
NAME         STATUS   ROLES           AGE     VERSION
mastern-01   Ready    control-plane   6d22h   v1.29.15
mastern-02   Ready    control-plane   6d21h   v1.29.15
workern-01   Ready    <none>          6d21h   v1.29.15
workern-02   Ready    <none>          6d21h   v1.29.15
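The same check can be scripted, for example as a final gate in a pipeline. The sketch below counts nodes whose STATUS column is anything other than exactly Ready; the function name count_not_ready is an illustrative choice, and a cordoned node (status "Ready,SchedulingDisabled") is counted as not ready under this strict comparison.

```shell
#!/usr/bin/env bash
# Count nodes whose STATUS column is not exactly "Ready".
# Expects the output of `kubectl get nodes --no-headers` on stdin.
count_not_ready() {
  awk 'NF && $2 != "Ready" { n++ } END { print n + 0 }'
}
```

A typical call is `kubectl get nodes --no-headers | count_not_ready`, which prints 0 when every node reports Ready.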

EXTRA 1

The playbook can also be adapted to use the Kubernetes module. Below is an alternative version. I haven’t tested it myself yet, so if you try it, feel free to share your experience in the comments section below.

---
## Install & configure Loadbalancer for k8s master nodes ##
- name: Configure HAProxy Load Balancer for Kubernetes master nodes
  hosts: prod_k8s_lb
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails

  tasks:
    - name: Install HAProxy
      apt:
        name: haproxy
        state: present
        update_cache: yes

    - name: Edit/Append HAProxy configuration file
      blockinfile:
        path: /etc/haproxy/haproxy.cfg
        marker: "# {mark} ANSIBLE K8S CONFIG"
        block: |
          listen stats
              bind :8404
              stats enable
              stats uri /stats
              stats refresh 10s

          frontend kubernetes
              bind 10.10.14.145:6443
              option tcplog
              mode tcp
              default_backend kubernetes-master-nodes

          backend kubernetes-master-nodes
              mode tcp
              balance roundrobin
              option tcp-check
              server mastern-01 10.10.14.120:6443 check fall 3 rise 2
              server mastern-02 10.10.14.121:6443 check fall 3 rise 2

    - name: Restart HAProxy
      service:
        name: haproxy
        state: restarted
        enabled: yes

    - name: Ensure ufw is installed and enabled on load balancer node
      apt:
        name: ufw
        state: present
        update_cache: yes

    - name: Enable UFW firewall on load balancer node (allow by default)
      ufw:
        state: enabled
        policy: allow

    - name: Open required ports on load balancer node
      ufw:
        rule: allow
        port: "{{ item }}"
        proto: tcp
      loop:
        - 8404  #HAProxy stats interface (optional, if enabled in config)
        - 6443  #Kubernetes API server

#############################
- name: Prepare all master and worker nodes for Kubernetes
  hosts: prod_k8s_master:prod_k8s_worker
  become: yes
  any_errors_fatal: true  #force Ansible to stop for all nodes if any one node fails
  vars:
    containerd_version: "1.7.14"
    runc_version: "1.1.12"
    crictl_version: "1.29.0"  # Keep in line with the Kubernetes minor version being installed

  tasks:
    - name: Ensure ufw is installed on all kubernetes nodes
      apt:
        name: ufw
        state: present
        update_cache: yes

    - name: Open common Kubernetes ports on all kubernetes nodes
      ufw:
        rule: allow
        port: "{{ item }}"
        proto: tcp
      loop:
        - 6443         # Kubernetes API server
        - 10250        # Kubelet API
        - 30000:32767  # NodePort range

    - name: Open master-only ports
      ufw:
        rule: allow
        port: "{{ item }}"
        proto: tcp
      when: "'prod_k8s_master' in group_names"
      loop:
        - 2379:2380  # etcd
        - 10257      # Controller Manager
        - 10259      # Scheduler

    #### STEP 1 - BEGIN ####
    # - name: Disable swap
    #   shell: |
    #     swapoff -a
    #     sed -i '/ swap / s/^/#/' /etc/fstab

    - name: Disable swap
      command: swapoff -a
      when: ansible_swaptotal_mb > 0

    - name: Comment out swap entries in /etc/fstab
      replace:
        path: /etc/fstab
        regexp: '^([^#].*\s+swap\s+)'
        replace: '# \1'
    #### STEP 1 - END ####

    #### STEP 2 - BEGIN ####
    # The copy task writes to /etc/modules-load.d/k8s.conf, which ensures the overlay
    # and br_netfilter modules will be automatically loaded on every boot.
    - name: Ensure the overlay and br_netfilter modules are Loaded at boot time
      copy:
        dest: /etc/modules-load.d/k8s.conf
        content: |
          overlay
          br_netfilter

    - name: Load modules immediately
      modprobe:
        name: "{{ item }}"
        state: present
      loop:
        - overlay
        - br_netfilter
    #### STEP 2 - END ####

    #### STEP 3 - BEGIN ####
    - name: Ensure IP forwarding is enabled at the kernel level for Kubernetes
      copy:
        dest: /etc/sysctl.d/k8s.conf
        content: |
          net.bridge.bridge-nf-call-iptables = 1
          net.bridge.bridge-nf-call-ip6tables = 1
          net.ipv4.ip_forward = 1

    - name: Apply sysctl parameters immediately
      command: sysctl --system
    #### STEP 3 - END ####

    #### STEP 4 - BEGIN ####
    - name: Download and Install prerequisite packages
      apt:
        name:
          - curl
          - gnupg
          - ca-certificates
          - lsb-release
          - apt-transport-https
        update_cache: yes
    #### STEP 4 - END ####

    #### STEP 5 - BEGIN ####
    #Install container Runtime
    ## install containerd using the steps below
    - name: Download containerd binaries
      get_url:
        url: "https://github.com/containerd/containerd/releases/download/v{{ containerd_version }}/containerd-{{ containerd_version }}-linux-amd64.tar.gz"
        dest: "/tmp/containerd.tar.gz"
        timeout: 60  #increase the timeout

    - name: Extract the downloaded containerd files
      unarchive:
        src: /tmp/containerd.tar.gz
        dest: /usr/local
        remote_src: yes

    - name: Ensure systemd directory for containerd exists
      file:
        path: /usr/local/lib/systemd/system
        state: directory
        mode: '0755'

    # To make/configure containerd run as a systemd service
    - name: Download containerd systemd service file
      get_url:
        url: https://raw.githubusercontent.com/containerd/containerd/main/containerd.service
        dest: /usr/local/lib/systemd/system/containerd.service
        timeout: 60  #increase the timeout

    - name: Create containerd config directory
      file:
        path: /etc/containerd
        state: directory

    - name: Generate containerd default config (config.toml) & save it to /etc/containerd
      shell: "containerd config default | tee /etc/containerd/config.toml"
      args:
        creates: /etc/containerd/config.toml

    - name: Set SystemdCgroup = true in containerd config
      replace:
        path: /etc/containerd/config.toml
        regexp: 'SystemdCgroup = false'
        replace: 'SystemdCgroup = true'

    - name: Restart containerd with new config
      service:
        name: containerd
        state: restarted
        enabled: true
    #### STEP 5 - END ####

    #### STEP 6 - BEGIN ####
    #Install runc
    - name: Download runc binary
      get_url:
        url: "https://github.com/opencontainers/runc/releases/download/v{{ runc_version }}/runc.amd64"
        dest: /tmp/runc.amd64
        mode: '0755'
        timeout: 60
      retries: 3
      delay: 10
      register: download_runc
      until: download_runc is succeeded

    - name: Install runc
      copy:
        src: /tmp/runc.amd64
        dest: /usr/local/sbin/runc
        mode: '0755'
        remote_src: yes
    #### STEP 6 - END ####

    #### STEP 7 - BEGIN ####
    ##configure crictl to work with containerd##
    # Installation of the previous packages may have come with crictl
    - name: Check if crictl is already installed
      stat:
        path: /usr/local/bin/crictl
      register: crictl_binary

    # - name: Download crictl
    #   get_url:
    #     url: "https://github.com/kubernetes-sigs/cri-tools/releases/download/v{{ crictl_version }}/crictl-v{{ crictl_version }}-linux-amd64.tar.gz"

# dest: "/tmp/crictl.tar.gz"

# timeout: 60

# when: not crictl_binary.stat.exists

# register: download_crictl

# until: download_crictl is succeeded

# retries: 3

# delay: 10

# - name: Extract and install crictl

# unarchive:

# src: "/tmp/crictl.tar.gz"

# dest: /usr/local/bin

# remote_src: yes

# when: not crictl_binary.stat.exists

- name: Create crictl config #To make crictl work with containerd

copy:

dest: /etc/crictl.yaml

content: |

runtime-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

#### STEP 7 - END ####

#### STEP 8 - BEGIN ####

## install kubernetes packages/tools

- name: Ensure APT keyring directory exists # --- ADDED

file:

path: /etc/apt/keyrings

state: directory

mode: '0755'

- name: Download Kubernetes release key if not already present # --- ADDED

get_url:

url: https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key

dest: /etc/apt/keyrings/kubernetes-apt-keyring.asc

mode: '0644'

force: no

- name: Convert GPG key to keyring if not already converted # --- ADDED

command: >

gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg /etc/apt/keyrings/kubernetes-apt-keyring.asc

args:

creates: /etc/apt/keyrings/kubernetes-apt-keyring.gpg

- name: Add Kubernetes repo

apt_repository:

repo: "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.29/deb/ /"

state: present

filename: kubernetes

- name: Install Kubernetes packages/tools

apt:

name:

- kubelet

- kubeadm

- kubectl

state: present

update_cache: yes

- name: Hold Kubernetes packages

dpkg_selections:

name: "{{ item }}"

selection: hold

loop:

- kubelet

- kubeadm

- kubectl

register: hold_result

until: hold_result is succeeded

retries: 5

delay: 10

# - name: Enable and start kubelet service

# systemd:

# name: kubelet

# enabled: yes

# state: started

#### STEP 8 - END ####

#### STEP 9 - BEGIN ####

#Initialize control plane 1

- name: Initialize Kubernetes control plane on the first master node

hosts: "{{ groups['prod_k8s_master'][0] }}"

become: yes

any_errors_fatal: true #force Ansible to stop for all nodes if any one node fails

tasks:

- name: Initialize Kubernetes cluster with kubeadm on the first master node

shell: |

kubeadm init \

--pod-network-cidr=192.168.0.0/16 \

--control-plane-endpoint="10.10.14.145:6443" \

--upload-certs --v=5 | tee /tmp/kubeadm_init.log

args:

creates: /etc/kubernetes/admin.conf

- name: Wait for Kubernetes API server to be ready on port 6443

wait_for:

port: 6443

host: "{{ ansible_host }}"

delay: 10

timeout: 300 #increase the timeout based on your network latency

state: started

- name: Extract kubeadm join token

shell: grep -A1 "kubeadm join" /tmp/kubeadm_init.log | grep "kubeadm join" | awk '{print $5}' | head -n1

register: kubeadm_token

- name: Extract discovery-token-ca-cert-hash

shell: grep -o 'sha256:[0-9a-f]*' /tmp/kubeadm_init.log | head -n1

register: discovery_hash

- name: Extract certificate key

shell: grep -A5 "kubeadm join" /tmp/kubeadm_init.log | grep -- '--certificate-key' | awk '{print $3}'

register: cert_key

- name: Set cluster join variables globally (on localhost)

hosts: localhost

gather_facts: false

tasks:

- set_fact:

load_balancer_endpoint: "10.10.14.145:6443"

join_token: "{{ hostvars[groups['prod_k8s_master'][0]]['kubeadm_token']['stdout'] }}"

discovery_token_hash: "{{ hostvars[groups['prod_k8s_master'][0]]['discovery_hash']['stdout'] }}"

control_plane_cert_key: "{{ hostvars[groups['prod_k8s_master'][0]]['cert_key']['stdout'] }}"

#### STEP 9 - END ####

#### STEP 10 - BEGIN ####

# Configure the ansu user to run kubectl against the cluster on the master nodes

- name: Configure kubeconfig for ansu on master node 1

hosts: "{{ groups['prod_k8s_master'][0] }}"

become: yes

any_errors_fatal: true

tasks:

- name: Ensure .kube directory exists for ansu

file:

path: /home/ansu/.kube

state: directory

owner: ansu

group: ansu

mode: '0755'

become: yes

- name: Copy admin.conf to ansu's kube config

copy:

src: /etc/kubernetes/admin.conf

dest: /home/ansu/.kube/config

remote_src: yes

owner: ansu

group: ansu

mode: '0644'

force: no # With force set to no, the file is copied only if the destination does not already exist

become: yes

- name: Ensure pip3 is installed on master node 1

ansible.builtin.package:

name: python3-pip

state: present

- name: Ensure kubernetes Python client is installed on master node 1

ansible.builtin.pip:

name: kubernetes

executable: pip3

#### STEP 10 - END ####

#### STEP 11 - BEGIN ####

- name: Install Calico CNI on master node 1 only

hosts: prod_k8s_master

become: yes

any_errors_fatal: true

vars:

kubeconfig_path: /etc/kubernetes/admin.conf

tigera_operator_manifest: https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/tigera-operator.yaml

calico_cr_manifest: https://raw.githubusercontent.com/projectcalico/calico/v3.27.3/manifests/custom-resources.yaml

tasks:

- name: Check if Tigera operator is already installed

kubernetes.core.k8s_info:

api_version: apps/v1

kind: Deployment

name: tigera-operator

namespace: tigera-operator

kubeconfig: "{{ kubeconfig_path }}"

register: tigera_operator_check

ignore_errors: yes

when: inventory_hostname == groups['prod_k8s_master'][0]

- name: Apply Tigera operator manifest (fresh install or update)

kubernetes.core.k8s:

state: present

src: "{{ tigera_operator_manifest }}"

kubeconfig: "{{ kubeconfig_path }}"

validate_certs: no

when: inventory_hostname == groups['prod_k8s_master'][0]

- name: Wait for Installation CRD to be registered

shell: |

for i in $(seq 1 10); do

kubectl get crd installations.operator.tigera.io --kubeconfig={{ kubeconfig_path }} && break || sleep 3

done

changed_when: false

when: inventory_hostname == groups['prod_k8s_master'][0]

- name: Wait for Tigera operator deployment to be available

kubernetes.core.k8s_info:

api_version: apps/v1

kind: Deployment

name: tigera-operator

namespace: tigera-operator

kubeconfig: "{{ kubeconfig_path }}"

register: tigera_deploy

until: tigera_deploy.resources[0].status.availableReplicas | default(0) > 0

retries: 10

delay: 12

when: inventory_hostname == groups['prod_k8s_master'][0]

- name: Check if Calico Installation custom resource exists

shell: |

kubectl get Installation default -o name --kubeconfig={{ kubeconfig_path }}

register: calico_cr_check

ignore_errors: yes

changed_when: false

when: inventory_hostname == groups['prod_k8s_master'][0]

- name: Apply Calico custom-resources.yaml (fresh install only)

kubernetes.core.k8s:

state: present

src: "{{ calico_cr_manifest }}"

kubeconfig: "{{ kubeconfig_path }}"

validate_certs: no

when:

- inventory_hostname == groups['prod_k8s_master'][0]

- calico_cr_check.rc != 0

- name: Wait for critical Calico pods to be ready

shell: |

kubectl wait --for=condition=Ready pod -l k8s-app=calico-kube-controllers -n calico-system --timeout=180s --kubeconfig={{ kubeconfig_path }} &&

kubectl wait --for=condition=Ready pod -l k8s-app=calico-typha -n calico-system --timeout=180s --kubeconfig={{ kubeconfig_path }} &&

kubectl wait --for=condition=Ready pod -l k8s-app=calico-node -n calico-system --timeout=180s --kubeconfig={{ kubeconfig_path }}

when: inventory_hostname == groups['prod_k8s_master'][0]

#### STEP 11 - END ####

#### STEP 12 - BEGIN ####

## Add a second control plane ##

- name: Join additional control plane nodes

hosts: "{{ groups['prod_k8s_master'][1:] }}"

become: yes

any_errors_fatal: true #force Ansible to stop for all nodes if any one node fails

tasks:

- name: Join the node to the control plane

shell: |

kubeadm join {{ hostvars['localhost']['load_balancer_endpoint'] }} \

--token {{ hostvars['localhost']['join_token'] }} \

--discovery-token-ca-cert-hash {{ hostvars['localhost']['discovery_token_hash'] }} \

--control-plane \

--certificate-key {{ hostvars['localhost']['control_plane_cert_key'] }} \

--v=5

args:

creates: /etc/kubernetes/kubelet.conf

#### STEP 12 - END ####

#### STEP 13 - BEGIN ####

## Add the worker nodes##

- name: Join worker nodes to the Kubernetes cluster

hosts: prod_k8s_worker

become: yes

any_errors_fatal: true #force Ansible to stop for all nodes if any one node fails

tasks:

- name: Join the node to the cluster

shell: |

kubeadm join {{ hostvars['localhost']['load_balancer_endpoint'] }} \

--token {{ hostvars['localhost']['join_token'] }} \

--discovery-token-ca-cert-hash {{ hostvars['localhost']['discovery_token_hash'] }} \

--v=5

args:

creates: /etc/kubernetes/kubelet.conf

#### STEP 13 - END ####

#### STEP 14 - BEGIN ####

- name: Configure kubeconfig for ansu on master node 2

hosts: "{{ groups['prod_k8s_master'][1] }}"

become: yes

any_errors_fatal: true

tasks:

- name: Ensure .kube directory exists for ansu

file:

path: /home/ansu/.kube

state: directory

owner: ansu

group: ansu

mode: '0755'

become: yes

- name: Copy admin.conf to ansu's kube config

copy:

src: /etc/kubernetes/admin.conf

dest: /home/ansu/.kube/config

remote_src: yes

owner: ansu

group: ansu

mode: '0644'

force: no # With force set to no, the file is copied only if the destination does not already exist

become: yes

#### STEP 14 - END ####

Extra 2 (Terraform Script for Linux VM Installation on VMware vCenter/vSphere/ESXi without Using count)

victor@Laptop-01 ~/IaC/tekneed-vm/vmware-vm/linux-vm (iac01)

$ tree

.

|-- backend.tf

|-- main.tf

|-- output.tf

|-- providers.tf

|-- terraform.tfvars

`-- variables.tf

0 directories, 6 files

main.tf

data "vsphere_datacenter" "dc" {

name = var.datacenter

}

data "vsphere_datastore" "datastore" {

name = var.datastore

datacenter_id = data.vsphere_datacenter.dc.id

}

data "vsphere_network" "network" {

name = var.network

datacenter_id = data.vsphere_datacenter.dc.id

}

data "vsphere_resource_pool" "pool" {

name = var.resource_pool

datacenter_id = data.vsphere_datacenter.dc.id

}

data "vsphere_virtual_machine" "template" {

name = var.template_name

datacenter_id = data.vsphere_datacenter.dc.id

}

resource "vsphere_virtual_machine" "vm" {

name = var.vm_name

resource_pool_id = data.vsphere_resource_pool.pool.id

datastore_id = data.vsphere_datastore.datastore.id

num_cpus = var.vm_cpu

memory = var.vm_memory

guest_id = data.vsphere_virtual_machine.template.guest_id

firmware = var.vm_firmware

#scsi_type = data.vsphere_virtual_machine.template.scsi_type

network_interface {

network_id = data.vsphere_network.network.id

adapter_type = "vmxnet3"

}

disk {

label = "${var.vm_name}.vmdk"

size = var.vm_disk_size

eagerly_scrub = false

thin_provisioned = true

}

clone {

template_uuid = data.vsphere_virtual_machine.template.id

customize {

linux_options {

host_name = var.vm_name

domain = "" # Set an empty string to bypass the requirement

}

network_interface {

ipv4_address = var.vm_ipv4_address

ipv4_netmask = var.vm_ipv4_netmask

dns_server_list = var.dns_servers

}

ipv4_gateway = var.vm_ipv4_gateway

}

}

# extra_config = {

# "guestinfo.metadata" = base64encode(templatefile("${path.module}/cloud-init.tpl", { ssh_public_key = var.ssh_public_key }))

# "guestinfo.metadata.encoding" = "base64"

# }

}

variables.tf

variable "vusername" {

type = string

description = "Username for vCenter"

}

variable "vpassword" {

type = string

description = "Password for vCenter"

sensitive = true

}

variable "vcenter" {

type = string

description = "vCenter server address"

}

variable "datacenter" {

type = string

description = "Name of the vSphere datacenter"

}

variable "resource_pool" {

type = string

description = "Resource pool where the VM will be deployed"

}

variable "datastore" {

type = string

description = "Datastore for the VM"

}

variable "network" {

type = string

description = "Network name for the VM"

}

variable "vm_name" {

type = string

description = "Name of the Virtual Machine"

}

variable "template_name" {

type = string

description = "Name of the template where the VM will be created from"

}

variable "vm_ipv4_address" {

type = string

description = "Static IPv4 address of the VM"

}

variable "vm_ipv4_gateway" {

type = string

description = "Default IPv4 gateway"

}

variable "vm_firmware" {

type = string

description = "Firmware type (bios or efi)"

}

variable "vm_ipv4_netmask" {

type = number

description = "Subnet mask as a CIDR prefix length (for example, 24)"

}

variable "vm_cpu" {

type = number

description = "Number of CPUs"

}

variable "vm_memory" {

type = number

description = "Memory size in MB"

}

variable "vm_disk_size" {

type = number

description = "Disk size in GB"

}

variable "dns_servers" {

type = list(string)

description = "List of DNS servers"

}

#variable "vm_domain" {

# type = string

# description = "Domain name for the VM"

# default = "localdomain"

#}

#variable "sudo_user" {

# type = string

# description = "Sudo user for VM login"

# default = "ansu"

#}

#variable "sudo_password" {

# type = string

# description = "Password for sudo user"

# sensitive = true

#}
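The description of `vm_firmware` says the value should be `bios` or `efi`. A `validation` block can enforce that at plan time, so a typo in terraform.tfvars is caught before anything is sent to vCenter. This is a minimal sketch rather than part of the original script: it would replace the existing `vm_firmware` declaration, and the error message wording is only illustrative.

```hcl
variable "vm_firmware" {
  type        = string
  description = "Firmware type (bios or efi)"

  # Reject anything other than the two values named in the description.
  validation {
    condition     = contains(["bios", "efi"], var.vm_firmware)
    error_message = "The vm_firmware value must be either \"bios\" or \"efi\"."
  }
}
```

With this in place, `terraform plan` stops with the error message if `vm_firmware` is set to any other string.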

terraform.tfvars

##########PROD###########

#vusername = "john.bull@tekneed.com"

#vpassword = "john.bull's password"

#vcenter = "vcenter FQDN or IP"

datacenter = "Tekneed-PR-DC" #EDIT

resource_pool = "Tekneed-CLSTR/Resources/Production VMs" #EDIT

datastore = "datastore01" #EDIT

network = "VLAN94" #EDIT

vm_name = "mastern-01" #EDIT

template_name = "ubuntu24.04-template2"

vm_firmware = "efi"

#vm_domain = "tekneed.com" #EDIT

vm_ipv4_address = "10.10.10.13" #EDIT

vm_ipv4_netmask = 24

vm_ipv4_gateway = "10.10.10.1" #EDIT

vm_cpu = 4 #EDIT

vm_memory = 16384 #Memory size in MB #EDIT

vm_disk_size = 500 #Disk size in GB #EDIT

dns_servers = ["10.10.10.34", "10.10.10.35"] #EDIT

#sudo_user = "sudo-user"

#sudo_password = "SuperSecurePassword"

providers.tf

provider "vsphere" {

user = var.vusername

password = var.vpassword

vsphere_server = var.vcenter

allow_unverified_ssl = true

}

output.tf

output "vm_name" {

value = vsphere_virtual_machine.vm.name

}

output "vm_ip" {

value = vsphere_virtual_machine.vm.default_ip_address

}

backend.tf

terraform {

backend "azurerm" {

resource_group_name = "tekneed_AutoRG" # Can be passed via `-backend-config=`"resource_group_name=<resource group name>"` in the `init` command.

storage_account_name = "tekneedautostorage" # Can be passed via `-backend-config=`"storage_account_name=<storage account name>"` in the `init` command.

container_name = "tekneed-linux-vmwarevm" # Can be passed via `-backend-config=`"container_name=<container name>"` in the `init` command.

key = "tekneed-linux-vmwarevm.tfstate" #Name of the terraform tfstate file # Can be passed via `-backend-config=`"key=<blob key name>"` in the `init` command.

}

}

Extra 3 (Terraform Script for Windows VM Installation on VMware vCenter/vSphere/ESXi without Using count)

victor@Laptop01 MINGW64 ~/IaC/tekneed-vm/vmware-vm/windows-vm (iac01)

$ tree

.

|-- backend.tf

|-- main.tf

|-- output.tf

|-- providers.tf

|-- terraform.tfvars

`-- variables.tf

0 directories, 6 files

main.tf

data "vsphere_datacenter" "dc" {

name = var.datacenter

}

data "vsphere_datastore" "datastore" {

name = var.datastore

datacenter_id = data.vsphere_datacenter.dc.id

}

data "vsphere_network" "network" {

name = var.network

datacenter_id = data.vsphere_datacenter.dc.id

}

data "vsphere_resource_pool" "pool" {

name = var.resource_pool

datacenter_id = data.vsphere_datacenter.dc.id

}

#Using just cluster instead of resource pool

#data "vsphere_compute_cluster" "cluster" {

# name = var.compute_cluster

# datacenter_id = data.vsphere_datacenter.dc.id

#}

data "vsphere_virtual_machine" "template" {

name = var.template_name

datacenter_id = data.vsphere_datacenter.dc.id

}

resource "vsphere_virtual_machine" "vm" {

name = var.vm_name

resource_pool_id = data.vsphere_resource_pool.pool.id

datastore_id = data.vsphere_datastore.datastore.id

num_cpus = var.vm_cpu

memory = var.vm_memory

guest_id = data.vsphere_virtual_machine.template.guest_id

firmware = var.vm_firmware

scsi_type = data.vsphere_virtual_machine.template.scsi_type

network_interface {

network_id = data.vsphere_network.network.id

adapter_type = "vmxnet3"

}

disk {

label = "${var.vm_name}.vmdk"

size = var.vm_disk_size

eagerly_scrub = false

thin_provisioned = true

}

clone {

template_uuid = data.vsphere_virtual_machine.template.id

customize {

windows_options {

computer_name = var.computer_name

admin_password = var.admin_password

join_domain = var.domain_name

domain_admin_user = var.domain_admin_user

domain_admin_password = var.domain_admin_password

}

network_interface {

ipv4_address = var.vm_ipv4_address

ipv4_netmask = var.vm_ipv4_netmask

dns_server_list = var.dns_servers

}

ipv4_gateway = var.vm_ipv4_gateway

}

}

}
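The commented-out `vsphere_compute_cluster` data source in this file is meant for environments where VMs are placed directly in a cluster instead of a named resource pool. A sketch of how that variant could look, assuming the `compute_cluster` variable (commented out in variables.tf and terraform.tfvars) is enabled. The local name `resource_pool_id` is introduced here only for illustration.

```hcl
# Look up the cluster instead of a named resource pool.
data "vsphere_compute_cluster" "cluster" {
  name          = var.compute_cluster
  datacenter_id = data.vsphere_datacenter.dc.id
}

locals {
  # Every cluster exposes a root resource pool. In the VM resource, set
  #   resource_pool_id = local.resource_pool_id
  # in place of data.vsphere_resource_pool.pool.id.
  resource_pool_id = data.vsphere_compute_cluster.cluster.resource_pool_id
}
```

If you go this route, the `vsphere_resource_pool` data source and the `resource_pool` variable are no longer needed and can be removed.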

variables.tf

variable "vusername" {

type = string

description = "Username for vCenter"

sensitive = true

}

variable "vpassword" {

type = string

description = "Password for vCenter"

sensitive = true

}

variable "vcenter" {

type = string

description = "vCenter server address"

sensitive = true

}

variable "datacenter" {

type = string

description = "Name of the vSphere datacenter"

}

variable "resource_pool" {

type = string

description = "Resource pool where the VM will be deployed"

}

#using just cluster instead of resource pool

#variable "compute_cluster" {

# type = string

# description = "Compute cluster name"

#}

variable "datastore" {

type = string

description = "Datastore for the VM"

}

variable "network" {

type = string

description = "Network name for the VM"

}

variable "vm_name" {

type = string

description = "Name of the Virtual Machine"

}

variable "computer_name" {

type = string

description = "Windows hostname"

}

variable "template_name" {

type = string

description = "Name of the template where the VM will be created from"

}

variable "vm_ipv4_address" {

type = string

description = "Static IPv4 address of the VM"

}

variable "vm_ipv4_gateway" {

type = string

description = "Default IPv4 gateway"

}

variable "vm_firmware" {

type = string

description = "Firmware type (bios or efi)"

}

variable "vm_ipv4_netmask" {

type = number

description = "Subnet mask as a CIDR prefix length (for example, 24)"

}

variable "vm_cpu" {

type = number

description = "Number of CPUs"

}

variable "vm_memory" {

type = number

description = "Memory size in MB"

}

variable "vm_disk_size" {

type = number

description = "Disk size in GB"

}

variable "admin_password" {

type = string

description = "Administrator password"

sensitive = true

}

variable "domain_name" {

type = string

description = "Domain name to join"

}

variable "domain_admin_user" {

type = string

description = "Domain administrator username"

sensitive = true

}

variable "domain_admin_password" {

type = string

description = "Domain administrator password"

sensitive = true

}

variable "dns_servers" {

type = list(string)

description = "List of DNS servers"

}

terraform.tfvars

###########PROD##########

#vusername = "john.bull@tekneed.com"

#vpassword = "password"

#vcenter = "vcenter FQDN or IP"

datacenter = "tekneed-PR-DC" #EDIT

#compute_cluster = "PROD-CLUSTER"

resource_pool = "PROD-CLUSTER/Resources/Production VMs" #EDIT

datastore = "datastore-01"

network = "VLAN94" #EDIT

vm_name = "mastern-02" #VM name #EDIT

computer_name = "mastern-02" # Windows Host Name #EDIT

template_name = "win2025-template" #EDIT

vm_firmware = "efi"

vm_cpu = 8 #EDIT

vm_memory = 8096 #Memory size in MB #EDIT

vm_disk_size = 100 #Disk size in GB #EDIT

vm_ipv4_address = "10.10.10.138" #EDIT

vm_ipv4_netmask = 24

vm_ipv4_gateway = "10.10.10.1" #EDIT

#admin_password = "password"

domain_name = "tekneed.com" #EDIT

#domain_admin_user = "tekneed\\john.bull"

#domain_admin_password = "password"

dns_servers = ["10.10.10.133", "10.10.10.134"] #EDIT

providers.tf

provider "vsphere" {

user = var.vusername

password = var.vpassword

vsphere_server = var.vcenter

allow_unverified_ssl = true

}

output.tf

output "vm_name" {

value = vsphere_virtual_machine.vm.name

}

output "vm_ip" {

value = vsphere_virtual_machine.vm.default_ip_address

}

backend.tf

terraform {

backend "azurerm" {

resource_group_name = "tekneed_AutoRG" # Can be passed via `-backend-config=`"resource_group_name=<resource group name>"` in the `init` command.

storage_account_name = "tekneedautostorage" # Can be passed via `-backend-config=`"storage_account_name=<storage account name>"` in the `init` command.

container_name = "tekneed-windows-vmwarevm" # Can be passed via `-backend-config=`"container_name=<container name>"` in the `init` command.

key = "tekneed-windows-vmwarevm.tfstate" #Name of the terraform tfstate file # Can be passed via `-backend-config=`"key=<blob key name>"` in the `init` command.

}

}
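The comments in backend.tf note that each setting can be supplied through `-backend-config` when running `terraform init`. One way to do that is to keep the values in a separate settings file and pass the file path instead of individual key/value pairs. The sketch below assumes a file named `prod.azurerm.tfbackend`, which is a hypothetical name; the values are the ones already shown in backend.tf.

```hcl
# prod.azurerm.tfbackend (hypothetical file name)
# Load it with: terraform init -backend-config=prod.azurerm.tfbackend
# backend.tf can then omit these settings and keep only: backend "azurerm" {}
resource_group_name  = "tekneed_AutoRG"
storage_account_name = "tekneedautostorage"
container_name       = "tekneed-windows-vmwarevm"
key                  = "tekneed-windows-vmwarevm.tfstate"
```

Keeping one such file per environment lets the same backend.tf be reused, with only the file passed to `terraform init` changing.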
